Swijj

Swijj is a dictionary of hypothetical slang words: some of them may already be real, while others have yet to come into use. This project is a playful look at how language evolves, as well as a reflection on attempts at gatekeeping speech. Words are forever coming and going, and the job of the Dictionary is to describe their use, not to prescribe which are valid.

The project was inspired by looking up the word rizz in Merriam-Webster and realizing it’s just a derivation of charisma (take kə-ˈriz-mə and drop the first and last syllables, keeping only the syllable with the primary stress).

Each page includes the constructed word, a generated definition, and the original word it is derived from. Users can click “new word” to randomly cycle through a seemingly endless stream of such words.

This project was created during Connections Lab for IMA Low Res at NYU.

Example Words

Here are a few of my favorite words from Swijj (click the link to see the meaning):

Process

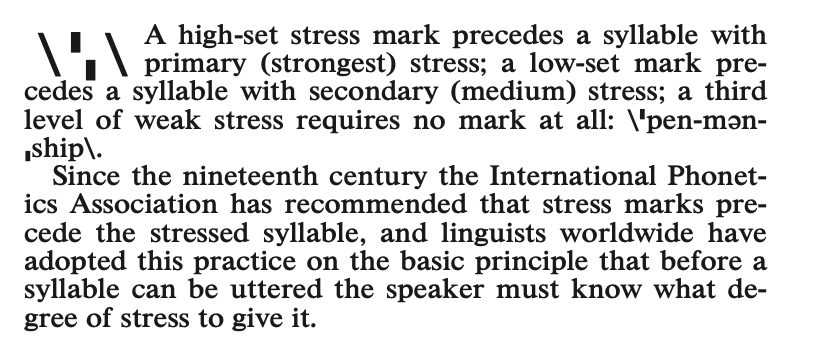

First, a random English word is selected. Then I fetch its pronunciation from Merriam-Webster’s API and parse the specialized notation described in their pronunciation guide.
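As a rough illustration of these first two steps, the sketch below picks a word and builds a request URL for Merriam-Webster’s Collegiate Dictionary API using only the Rust standard library. The helper names `pick_word` and `collegiate_url` are hypothetical. The `RandomState` trick is a stand-in for a proper random-number crate such as `rand`. The HTTP request and JSON parsing are left out. In the JSON response, pronunciations sit under each entry’s `hwi.prs` array, whose `mw` field holds strings like `kə-ˈriz-mə`.

```rust
use std::collections::hash_map::RandomState;
use std::hash::{BuildHasher, Hasher};

/// Picks a pseudo-random word using only std: a freshly keyed `RandomState`
/// hasher yields an unpredictable u64. (Hypothetical helper; a real project
/// would more likely use the `rand` crate.)
fn pick_word<'a>(words: &[&'a str]) -> Option<&'a str> {
    if words.is_empty() {
        return None;
    }
    let n = RandomState::new().build_hasher().finish();
    Some(words[(n % words.len() as u64) as usize])
}

/// Builds the request URL for the Collegiate Dictionary endpoint
/// (hypothetical helper; the word is assumed to need no percent-encoding).
fn collegiate_url(word: &str, api_key: &str) -> String {
    format!("https://www.dictionaryapi.com/api/v3/references/collegiate/json/{word}?key={api_key}")
}
```

A real client would also percent-encode words containing spaces or other special characters before building the URL.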

The pronunciation string is parsed into a list of syllables tagged by stress level, and each syllable is further split into a list of vowel and consonant sounds.

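```rust
// Merriam-Webster marks primary and secondary stress at the start of a syllable.
const STRESS_PRIM: char = 'ˈ';
const STRESS_SEC: char = 'ˌ';

// A syllable (its vowel and consonant sounds) tagged with its stress level.
enum Stress {
    Primary(Syllable),
    Secondary(Syllable),
    Unstressed(Syllable),
}

// `syllables`: an iterator over one pronunciation's syllable strings.
// `get_syllable` splits a syllable string into its vowel and consonant sounds.
let tagged: Vec<Stress> = syllables
    .map(|s| {
        if let Some(rest) = s.strip_prefix(STRESS_PRIM) {
            Stress::Primary(get_syllable(rest))
        } else if let Some(rest) = s.strip_prefix(STRESS_SEC) {
            Stress::Secondary(get_syllable(rest))
        } else {
            Stress::Unstressed(get_syllable(s))
        }
    })
    .collect();
```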
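The fragment above relies on helpers that aren’t shown. For a self-contained picture, here is a minimal sketch of both steps. It assumes that syllables in the `mw` string are separated by hyphens. It also assumes a syllable’s sounds can be approximated as runs of vowel or consonant symbols, so a cluster like `str` stays together and the diphthong `ȯi` becomes one vowel. The vowel list, `split_sounds`, and `tag_stress` are illustrative assumptions, not the project’s actual lexer, which follows the full pronunciation guide.

```rust
const STRESS_PRIM: char = 'ˈ';
const STRESS_SEC: char = 'ˌ';

/// One sound in a syllable; in this sketch, a maximal run of vowel or consonant symbols.
#[derive(Debug, Clone, PartialEq)]
enum Sound {
    Vowel(String),
    Consonant(String),
}

/// A syllable's sounds, tagged with the syllable's stress level.
#[derive(Debug, PartialEq)]
enum Stress {
    Primary(Vec<Sound>),
    Secondary(Vec<Sound>),
    Unstressed(Vec<Sound>),
}

/// Assumed subset of the vowel symbols from the pronunciation guide.
fn is_vowel(c: char) -> bool {
    "aeiouəāäēīōȯü".contains(c)
}

/// Combining diacritics (e.g. the dot in "u̇") belong to the preceding symbol.
fn is_combining(c: char) -> bool {
    ('\u{0300}'..='\u{036F}').contains(&c)
}

/// Splits one syllable into runs of vowel and consonant symbols.
fn split_sounds(syllable: &str) -> Vec<Sound> {
    let mut sounds: Vec<Sound> = Vec::new();
    for c in syllable.chars() {
        let vowel = is_vowel(c);
        // Extend the current run if `c` is the same kind of symbol or a combining mark.
        let extend = match sounds.last() {
            Some(Sound::Vowel(_)) => vowel || is_combining(c),
            Some(Sound::Consonant(_)) => !vowel,
            None => false,
        };
        if extend {
            if let Some(Sound::Vowel(run) | Sound::Consonant(run)) = sounds.last_mut() {
                run.push(c);
            }
        } else if vowel {
            sounds.push(Sound::Vowel(c.to_string()));
        } else {
            sounds.push(Sound::Consonant(c.to_string()));
        }
    }
    sounds
}

/// Tags each hyphen-separated syllable of a pronunciation with its stress level.
fn tag_stress(pronunciation: &str) -> Vec<Stress> {
    pronunciation
        .split('-')
        .map(|s| {
            if let Some(rest) = s.strip_prefix(STRESS_PRIM) {
                Stress::Primary(split_sounds(rest))
            } else if let Some(rest) = s.strip_prefix(STRESS_SEC) {
                Stress::Secondary(split_sounds(rest))
            } else {
                Stress::Unstressed(split_sounds(s))
            }
        })
        .collect()
}
```

For example, `tag_stress("kə-ˈriz-mə")` yields an unstressed `k` + `ə`, a primary-stressed `r` + `i` + `z`, and another unstressed `m` + `ə`.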

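Using Rust’s slice patterns, I can drop or modify syllables according to rules for different stress patterns. For example, when a word has three syllables with the primary stress on the middle one (as in charisma), I keep only the stressed syllable and drop the first and last. When a word starts with a primary stress and ends with a secondary stress, I take the final syllable and replace its first consonant with a random one, or prepend a random consonant if the syllable starts with a vowel.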

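```rust
// Returns None when no rule covers the word's stress pattern.
fn make_slang(syllables: &[Stress]) -> Option<String> {
    match syllables {
        // unstressed, primary, unstressed: keep only the stressed middle syllable
        [Stress::Unstressed(_), Stress::Primary(stressed_syllable), Stress::Unstressed(_)] => {
            Some(make_word(stressed_syllable))
        }
        // starts with primary stress, ends with secondary stress: rebuild the last syllable
        [Stress::Primary(_), .., Stress::Secondary(s)] => {
            // check whether the syllable starts with a consonant or a vowel
            match &s[..] {
                [Sound::Consonant(con), tail @ ..] => {
                    // replace the first consonant with a random one
                    let new_consonant = random_consonant(con);
                    Some(make_word(&concat_sounds(&[new_consonant], tail)))
                }
                _ => {
                    // vowel-initial syllable: prepend a random consonant
                    let new_consonant = random_consonant("");
                    Some(make_word(&concat_sounds(&[new_consonant], s)))
                }
            }
        }
        // no rule for this stress pattern
        _ => None,
    }
}
```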
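To see the two rules run end to end, the following sketch uses simplified, hypothetical types. Each syllable is a plain string of pronunciation symbols. The “random” consonant is passed in as `onset` so the result is deterministic. `replace_onset` swaps out every consonant before the first vowel. It illustrates the slice-pattern approach under those assumptions and is not the project’s code.

```rust
/// Simplified stand-in: each syllable is a string of pronunciation symbols.
#[derive(Debug)]
enum Stress {
    Primary(String),
    Secondary(String),
    Unstressed(String),
}

/// Assumed subset of vowel symbols.
fn is_vowel(c: char) -> bool {
    "aeiouəāäēīōȯü".contains(c)
}

/// Replaces every consonant before the syllable's first vowel with `onset`;
/// a vowel-initial syllable simply gets `onset` prepended.
fn replace_onset(syllable: &str, onset: &str) -> String {
    let first_vowel = syllable.find(is_vowel).unwrap_or(syllable.len());
    format!("{onset}{}", &syllable[first_vowel..])
}

/// Applies the two stress-pattern rules; `onset` stands in for the random consonant.
fn make_slang(syllables: &[Stress], onset: &str) -> Option<String> {
    match syllables {
        // unstressed, PRIMARY, unstressed (e.g. kə-ˈriz-mə): keep the middle syllable
        [Stress::Unstressed(_), Stress::Primary(middle), Stress::Unstressed(_)] => {
            Some(middle.clone())
        }
        // primary first, secondary last: give the final syllable a new onset
        [Stress::Primary(_), .., Stress::Secondary(last)] => Some(replace_onset(last, onset)),
        // no rule applies to this stress pattern
        _ => None,
    }
}
```

With these definitions, `kə-ˈriz-mə` tagged as unstressed, primary, unstressed gives `Some("riz")`, the same derivation as rizz. `ˈfu̇t-ˌbȯl` (football) with onset `sw` gives `Some("swȯl")`. Patterns that match neither rule return `None`, so a caller can skip that word and draw another.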

The new list of syllables is then converted back to a string of letters to spell a new word. Note that because this is based on the pronunciation symbols (and English spelling is unpredictable), the new spelling will often diverge from the spelling of the original word. Here is a snippet of some of those spelling rules:

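```rust
// `sounds` is a peekable iterator over the word's sounds; `word` collects the letters.
while let Some(sound) = sounds.next() {
    match sound {
        Sound::Vowel(s) => match s.as_str() {
            "ä" => word.push('o'),
            "ē" => word.push_str("ee"),
            "ȯ" => word.push('o'),
            "ü" => word.push_str("oo"),
            // "oi" inside a word (coin), "oy" at the end of a word (boy)
            "ȯi" => {
                if sounds.peek().is_some() {
                    word.push_str("oi");
                } else {
                    word.push_str("oy");
                }
            }
            // remaining vowel rules omitted here; fall back to the raw symbol
            other => word.push_str(other),
        },
        // consonant rules omitted here
        Sound::Consonant(c) => word.push_str(c.as_str()),
    }
}
```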
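The following std-only sketch restates those rules as a pure function so they can be tested in isolation. `spell_sound` maps one symbol to letters, and `respell` uses a peekable iterator to tell whether a symbol ends the word. The function names, the string-slice representation of sounds, and the pass-through for symbols without a rule are assumptions made for illustration.

```rust
/// Spells one pronunciation symbol with English letters. Only a few vowel
/// rules are included; any other symbol passes through unchanged.
fn spell_sound(sound: &str, is_last: bool) -> &str {
    match sound {
        "ä" | "ȯ" => "o",
        "ē" => "ee",
        "ü" => "oo",
        // word-final "ȯi" is spelled "oy" (boy), otherwise "oi" (coin)
        "ȯi" if is_last => "oy",
        "ȯi" => "oi",
        other => other,
    }
}

/// Respells a word's sounds, peeking one symbol ahead to detect the word's end.
fn respell(sounds: &[&str]) -> String {
    let mut word = String::new();
    let mut iter = sounds.iter().peekable();
    while let Some(sound) = iter.next() {
        let is_last = iter.peek().is_none();
        word.push_str(spell_sound(sound, is_last));
    }
    word
}
```

For instance, `respell(&["b", "ȯi"])` returns `"boy"` and `respell(&["k", "ȯi", "n"])` returns `"koin"`. The `k` survives untouched because this sketch has no consonant rules, which is the kind of divergence from conventional spelling that the rules produce.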

Finally, GPT-4 generates a snappy definition of five words or fewer from the new slang word, the original word, and its part of speech.

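```rust
use async_openai::types::CreateChatCompletionRequestArgs;

// `system`, `user`, and `assistant` are small helpers (not shown) that wrap a
// string in the chat message type for that role.
async fn generate_definition(
    slang: &str,
    origin: &str,
    label: &str, // part of speech, e.g. "noun"
) -> Result<String, Box<dyn std::error::Error>> {
    let request = CreateChatCompletionRequestArgs::default()
        .max_tokens(64u16)
        .model("gpt-4")
        .messages([
            system("You are a helpful assistant inventing definitions for new slang words. Each should be 5 words or less."),
            // one worked example (one-shot prompting)
            user("\"swijj\" (noun) derived from \"language\""),
            assistant("sleek and styling language"),
            user(&format!("\"{}\" ({}) derived from \"{}\"", slang, label, origin)),
        ])
        .build()?;

    // ... send `request` and return the text of the first choice
}
```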
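The prompt is a one-shot example: instructions, one worked example (`swijj`), then the real query. The sketch below builds the same message list with plain Rust types, independent of any OpenAI client library. It also adds a word-count check on the reply, since models do not always respect length instructions. `Role`, `build_messages`, and `within_word_limit` are hypothetical names, and the check is an optional safeguard rather than something the original pipeline is known to do.

```rust
/// Chat roles used in the one-shot prompt (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Role {
    System,
    User,
    Assistant,
}

/// Builds the prompt: instructions, one worked example, then the query.
fn build_messages(slang: &str, origin: &str, label: &str) -> Vec<(Role, String)> {
    vec![
        (
            Role::System,
            "You are a helpful assistant inventing definitions for new slang words. \
             Each should be 5 words or less."
                .to_string(),
        ),
        (Role::User, r#""swijj" (noun) derived from "language""#.to_string()),
        (Role::Assistant, "sleek and styling language".to_string()),
        (Role::User, format!("\"{slang}\" ({label}) derived from \"{origin}\"")),
    ]
}

/// Checks a generated definition against the word limit.
fn within_word_limit(definition: &str, max_words: usize) -> bool {
    definition.split_whitespace().count() <= max_words
}
```

For example, `build_messages("riz", "charisma", "noun")` produces four messages, and the last is `"riz" (noun) derived from "charisma"`.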

I ran this script locally on MIT’s 10,000-word list to produce a large dataset of generated slang words ready to be displayed on the website.

Web Design

The website is a simple Next.js app with a single page dedicated to each word. I used Next’s great static caching and server-side rendering so that the site feels fast and doesn’t require any client-side JavaScript to run.

Although the words presented are ridiculous, I wanted the design to feel serious and authoritative, like a formal dictionary. The site is typeset in Caslon, an old-style serif typeface that has been used in formal documents, fine book printing, and journals for 300 years. To further this formal and classic tone, I made sure to enable OpenType features such as discretionary ligatures and swashes.

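```css
h1 {
  /* kerning, standard and contextual ligatures, contextual alternates,
     discretionary ligatures, and swashes */
  font-feature-settings: 'kern', 'liga', 'clig', 'calt', 'dlig', 'swsh';
  font-variant-ligatures: discretionary-ligatures;
}
```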

I kept the visual design as minimal as possible, with a monochromatic color scheme. However, I created multiple color palettes which vary across pages to add some visual interest, as well as to reflect the variable nature of the words.

To juxtapose the formal tone, a random snarky remark is displayed in the corner of each page, ironically expressing disbelief at the word’s own inclusion.

Because I’m hoping people will want to share their word discoveries, I wanted good Open Graph support for link previews. I decided to try Satori, Vercel’s HTML-to-image engine, which also integrates seamlessly with Next.js edge functions. I used it to generate a simple preview image for each word page, rendered on demand when the page is shared. Unfortunately, Satori does not support OpenType features, so the link preview images have no ligatures.

![Example Open Graph preview image for a word page](/_next/image?url=%2F_next%2Fstatic%2Fmedia%2Fog.011d5d70.png&w=3840&q=75)

Reflections

When I started this project, I didn’t anticipate that I would be coding a lexer and parser, but that’s what it took to manipulate the pronunciation symbols into the forms I wanted. I primarily modeled these rules on the slang terms currently being coined by Gen Z, but I could also have added slang patterns from prior generations (e.g. “-izzle”). Even though these words are inventions, they may eventually seem outdated, so in the future I might add new rules to generate slang patterns that have yet to emerge.

The biggest challenge was compiling the stream of pronunciation symbols back into a word of English letters. I ended up revising the code for this and recomputing the spellings of all the words in my dataset because I wasn’t satisfied with how they read. The rules are still quite simple, so there are many cases where the spelling doesn’t match how an English speaker would expect the word to be written, but I think they work well enough for the majority of cases. To create more realistic spellings, I would have to implement an LL(k) parser that looks ahead to the next few symbols, as well as handle many, many exceptions. This is starting to sound scarily like a full-fledged programming language’s compiler, so perhaps I will leave it for my PhD dissertation!
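To illustrate what looking ahead buys, here is a small hand-written sketch that chooses each spelling by inspecting up to two upcoming symbols with Rust slice patterns. It is not a generated LL(k) parser. The rules are hypothetical simplifications of common English tendencies, not the project’s rules. A /k/ before a front vowel is spelled `k` and otherwise `c`. An `ā` followed by a single word-final consonant takes a silent `e`.

```rust
/// Vowel symbols recognized by this sketch (an assumed subset).
fn is_vowel(s: &str) -> bool {
    matches!(s, "a" | "ā" | "ä" | "e" | "ē" | "i" | "ī" | "ō" | "ȯ" | "ü" | "ə")
}

/// Spells pronunciation symbols, choosing each spelling by inspecting up to
/// two upcoming symbols. Hypothetical rules for illustration only.
fn spell_with_lookahead(sounds: &[&str]) -> String {
    let mut word = String::new();
    let mut i = 0;
    while i < sounds.len() {
        // Each arm yields the letters to emit and how many symbols it consumed.
        let (letters, consumed) = match &sounds[i..] {
            // "ā" + one word-final consonant: silent-e spelling, as in "cake"
            ["ā", c] if !is_vowel(c) => (format!("a{c}e"), 2),
            // /k/ before a front vowel stays "k", as in "keep" or "kit"
            ["k", "ē" | "i" | "e", ..] => ("k".to_string(), 1),
            // any other /k/ becomes "c", as in "cot"
            ["k", ..] => ("c".to_string(), 1),
            ["ē", ..] => ("ee".to_string(), 1),
            ["ä", ..] => ("o".to_string(), 1),
            [other, ..] => (other.to_string(), 1),
            [] => unreachable!("the loop condition guarantees a symbol remains"),
        };
        word.push_str(&letters);
        i += consumed;
    }
    word
}
```

With these rules, `["k", "ā", "k"]` spells `cake`, `["k", "ē", "p"]` spells `keep`, and `["k", "ä", "t"]` spells `cot`. Every new exception becomes another arm, which is how the rule set balloons toward a full parser.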

Languages shape the way we think, so it’s only right that people can leave their mark on language too. Policing which forms of language are valid is often used as an exertion of power, but propagating slang and vernacular can be a way for groups to reclaim that power.